These parameters get bundled in an object.

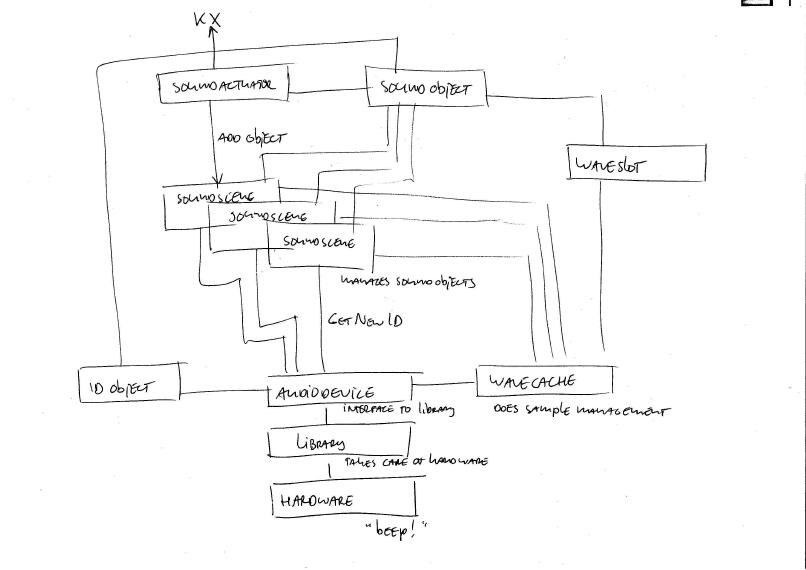

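So now we have two kinds of objects: SoundObjects and a ListenerObject. Before we go on, let's make an inventory of all the other objects, classes, devices and so on. The number in parentheses shows how many instances there are: (1) means exactly one, (N) means any number.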

SoundObject (N) - the object belonging to a sound in a space.

ListenerObject (1) - the one which can hear the soundobjects.

SoundActuator (N) - this is an actuator that takes care of triggering soundobjects with certain parameters.

SoundScene (N) - this scene manages the soundobjects belonging to the soundactuators in a ketsjiscene.

WaveSlot (N) - this is an object to store all kinds of sample-related stuff in.

WaveCache (1) - this is where all waveslots get managed.

DeviceManager (1) - this one takes care of setting and getting the right audiodevice.

AudioDevice (1) - this is the interface to the soundlibrary.

Soundlibrary (1) - this is an (external) module which takes care of the hardware side (like OpenAL or FMOD).

Soundobjects must be managed: you want to know whether they exist, when they die, where they're going, and so on. The soundscenes and the audiodevice take care of that. When using the SoundSystem in Blender you use only one audiodevice at a time (because the device does things like sample buffering in the hardware, and you don't want to share your already expensive and limited resources), but you can use multiple soundscenes. Each ketsjiscene has a soundscene, so if you have multiple ketsjiscenes, you automatically have multiple soundscenes.

When a soundactuator gets created in a ketsjiscene, it reports its soundobject to the soundscene. The soundscene then asks the wavecache for the waveslot belonging to the soundobject's sample. If it gets one (and therefore knows the sample is loaded), it adds the object to its list of soundobjects.
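The registration step can be sketched in C++ as follows. This is a minimal illustration under stated assumptions, not Blender's code: the class names follow the inventory, but every member and method (`LoadSample`, `GetWaveSlot`, `AddObject`, `ObjectCount`) is hypothetical, and a `std::map` from sample name to slot stands in for the wavecache.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in: sample-related data for one loaded sample.
struct WaveSlot {
    std::string sampleName;
    bool loaded;
};

// Hypothetical stand-in for the single WaveCache that manages all waveslots.
class WaveCache {
public:
    // Pretend to load a sample; a real cache would read and decode the file.
    void LoadSample(const std::string& name) { slots_[name] = WaveSlot{name, true}; }

    // Returns the slot for a sample, or nullptr if it was never loaded.
    const WaveSlot* GetWaveSlot(const std::string& name) const {
        auto it = slots_.find(name);
        return it != slots_.end() ? &it->second : nullptr;
    }

private:
    std::map<std::string, WaveSlot> slots_;  // map nodes keep stable addresses
};

struct SoundObject {
    std::string sampleName;
    const WaveSlot* slot = nullptr;
};

// One SoundScene per ketsjiscene; all scenes share the one WaveCache.
class SoundScene {
public:
    explicit SoundScene(const WaveCache& cache) : cache_(cache) {}

    // Called when a soundactuator is created. The object is only added if
    // the wavecache has a slot for its sample, i.e. the sample is loaded.
    bool AddObject(SoundObject* obj) {
        assert(obj != nullptr);
        const WaveSlot* slot = cache_.GetWaveSlot(obj->sampleName);
        if (slot == nullptr || !slot->loaded) return false;
        obj->slot = slot;
        objects_.push_back(obj);
        return true;
    }

    std::size_t ObjectCount() const { return objects_.size(); }

private:
    const WaveCache& cache_;
    std::vector<SoundObject*> objects_;
};
```

Passing the cache by reference into each scene mirrors the idea that there are many soundscenes but only one wavecache.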

When a soundactuator gets triggered to play a sound, it tells the soundscene it wants its soundobject to be played. The soundscene then asks the audiodevice for an ID. The audiodevice hands out the IDs for two reasons: there is only one audiodevice, so it is a central place to manage them, and it manages the resources. It knows which hardware/software buffers are free and, if none are free, which sound to stop so that its ID can be freed. The new object gets the new (or freed) ID and is put at the end of the list of playing objects. Each time another object gets an ID, it is also put at the end, so the objects already in the list move one position towards the front. The object at the front is therefore the oldest one playing, so when a sound has to be stopped you know which one to stop.
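A minimal C++ sketch of this ID scheme, assuming one ID per buffer and a fixed buffer count: free IDs are handed out first, and when none are left the oldest playing sound (the front of the list) is stopped and its ID reused. The method names (`GetNewId`, `ReleaseId`, `OldestPlaying`) are hypothetical, and the sketch only tracks IDs; a real device would also have to tell the stopped soundobject that it lost its buffer.

```cpp
#include <algorithm>
#include <cassert>
#include <list>
#include <vector>

// Hypothetical sketch of the single audio device's ID bookkeeping.
class AudioDevice {
public:
    explicit AudioDevice(int numBuffers) {
        assert(numBuffers > 0);
        // Push in reverse so IDs are handed out as 0, 1, 2, ...
        for (int id = numBuffers - 1; id >= 0; --id) free_.push_back(id);
    }

    // Returns an ID for a new sound. If all buffers are busy, the oldest
    // playing sound (front of the list) is stopped and its ID reused.
    int GetNewId() {
        int id;
        if (!free_.empty()) {
            id = free_.back();
            free_.pop_back();
        } else {
            id = playing_.front();  // oldest playing sound
            playing_.pop_front();   // "stop" it and take over its ID
        }
        playing_.push_back(id);     // the newest sound goes to the end
        return id;
    }

    // Called when a sound finishes on its own; its buffer becomes free.
    void ReleaseId(int id) {
        auto it = std::find(playing_.begin(), playing_.end(), id);
        if (it == playing_.end()) return;  // not playing: nothing to release
        playing_.erase(it);
        free_.push_back(id);
    }

    int OldestPlaying() const {
        assert(!playing_.empty());
        return playing_.front();
    }

private:
    std::vector<int> free_;   // unused buffer IDs
    std::list<int> playing_;  // front = oldest, back = newest
};
```

A `std::list` keeps the "push to the end, take from the front" order cheap, and removing a sound that stops on its own does not disturb the age order of the others.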

Every frame, Ketsji iterates through its scenes and calls their proceed function. For every scene it also calls the proceed function of that scene's soundscene. When the soundscene starts its proceed, it first updates the parameters of its listener and then iterates through its list of playing objects to update each one of them. When all are done, it kicks the audiodevice to tell it to update the library/hardware.
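The per-frame order (listener first, then every playing object, then one kick to the device) can be sketched like this. The types are deliberately minimal and hypothetical: `SetListener`, `UpdateObject`, `NextFrame` and `Proceed` are illustrative names, and the `updates`/`frames` counters stand in for the real position, velocity and gain updates.

```cpp
#include <cassert>
#include <vector>

// Hypothetical minimal types; counters stand in for real parameter updates.
struct ListenerObject { int updates = 0; };
struct SoundObject { int updates = 0; };

class AudioDevice {
public:
    void SetListener(const ListenerObject&) { /* push listener params */ }
    void UpdateObject(SoundObject& obj) { ++obj.updates; }
    // Flush pending changes to the sound library/hardware.
    void NextFrame() { ++frames; }
    int frames = 0;
};

class SoundScene {
public:
    SoundScene(AudioDevice& device, ListenerObject& listener)
        : device_(device), listener_(listener) {}

    void AddPlaying(SoundObject* obj) { playing_.push_back(obj); }

    // Called by Ketsji for this scene once per frame.
    void Proceed() {
        // 1. Update the listener's parameters.
        ++listener_.updates;
        device_.SetListener(listener_);
        // 2. Update every playing sound object.
        for (SoundObject* obj : playing_) device_.UpdateObject(*obj);
        // 3. Kick the device so the library/hardware applies the changes.
        device_.NextFrame();
    }

private:
    AudioDevice& device_;
    ListenerObject& listener_;
    std::vector<SoundObject*> playing_;
};
```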

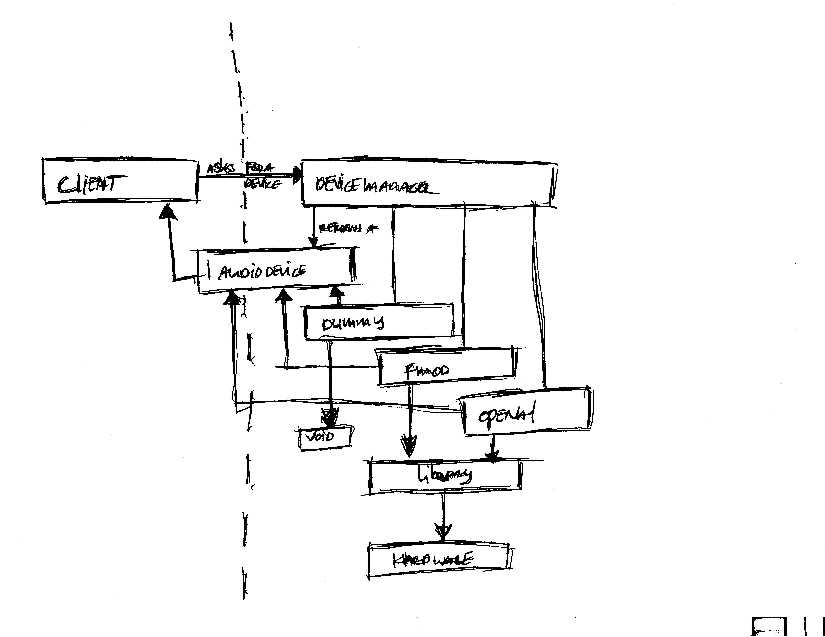

How do client applications get an audiodevice and talk to it?

Well, the only thing a client needs to know is how to talk to an audiodevice. It doesn't have to know what kind of device it is or what the output will be. So it asks the devicemanager for a subscription to the audiodevice (for there can be only one). Nothing else happens yet: the devicemanager now just knows that someone might want to use an audiodevice. There could already be an audiodevice, requested by another instance, or there could be none. The client doesn't know and doesn't need to know.
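One way to model "subscribing does nothing yet" is a subscriber count, sketched below in C++. The names (`Subscribe`, `Unsubscribe`, `Instance`, `DummyDevice`) are illustrative and may not match the real SND_DeviceManager. Two further behaviours are assumptions not stated above: the device is created lazily on the first `Instance()` call, and it is destroyed when the last subscriber leaves.

```cpp
#include <cassert>
#include <memory>

// Abstract device interface; clients never see the concrete type.
class IAudioDevice {
public:
    virtual ~IAudioDevice() = default;
};

// Hypothetical placeholder backend.
class DummyDevice : public IAudioDevice {};

// Hypothetical DeviceManager: subscribing only records interest.
class DeviceManager {
public:
    static void Subscribe() { ++subscribers_; }  // nothing is created here

    static void Unsubscribe() {
        assert(subscribers_ > 0);
        if (--subscribers_ == 0) device_.reset();  // assumption: last one out
    }

    // Creates the single device on first use; subscribing is required first.
    static IAudioDevice* Instance() {
        if (subscribers_ == 0) return nullptr;
        if (!device_) device_ = std::make_unique<DummyDevice>();
        return device_.get();
    }

    static bool HasDevice() { return device_ != nullptr; }

private:
    static int subscribers_;
    static std::unique_ptr<IAudioDevice> device_;
};

int DeviceManager::subscribers_ = 0;
std::unique_ptr<IAudioDevice> DeviceManager::device_;
```

Every client that calls `Instance()` gets the same pointer, which matches the "there can be only one" rule without the client knowing whether another instance asked first.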

The client can now do one of two things. First, it can simply ask for an audiodevice, and the devicemanager decides what kind to create. The client doesn't know what kind was created and just talks to an abstract interface (SND_IAudioDevice). Second, it can set the devicetype it wants the devicemanager to create and then ask for an audiodevice. Even then the client doesn't know what kind of device the manager created (and it shouldn't), so it still talks to the abstract interface (SND_IAudioDevice).
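The two paths (let the manager choose, or set a preferred type first) can be sketched as a small factory that only ever returns the abstract interface. This is a hypothetical C++ illustration: `IAudioDevice` is modelled on SND_IAudioDevice but is not its real declaration, `DeviceType`, `SetDeviceType` and `Instance` are illustrative names, the backends are empty stubs (the "OpenAL" one does not call OpenAL), and choosing OpenAL as the default is an assumption.

```cpp
#include <cassert>
#include <memory>

// Clients only talk to this interface (modelled on SND_IAudioDevice).
class IAudioDevice {
public:
    virtual ~IAudioDevice() = default;
    virtual void NextFrame() = 0;  // illustrative method
};

// Hypothetical stub backends the manager can choose between.
class DummyDevice : public IAudioDevice {
public:
    void NextFrame() override {}
};
class OpenALDevice : public IAudioDevice {  // stub: does not use OpenAL
public:
    void NextFrame() override {}
};

enum class DeviceType { Default, Dummy, OpenAL };

class DeviceManager {
public:
    // Optional: state a preference before asking for a device. Ignored once
    // a device exists, since there can be only one (assumption).
    void SetDeviceType(DeviceType type) {
        if (!device_) type_ = type;
    }

    // The manager picks the concrete class; the caller gets the interface.
    IAudioDevice& Instance() {
        if (!device_) {
            if (type_ == DeviceType::Dummy)
                device_ = std::make_unique<DummyDevice>();
            else  // Default and OpenAL both map to the OpenAL stub here
                device_ = std::make_unique<OpenALDevice>();
        }
        return *device_;
    }

private:
    DeviceType type_ = DeviceType::Default;
    std::unique_ptr<IAudioDevice> device_;
};
```

Because `Instance()` returns `IAudioDevice&`, client code compiles and behaves the same whichever backend was built, which is the point of hiding the concrete type.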